Isomap: A Nonlinear Dimensionality Reduction Technique


Introduction


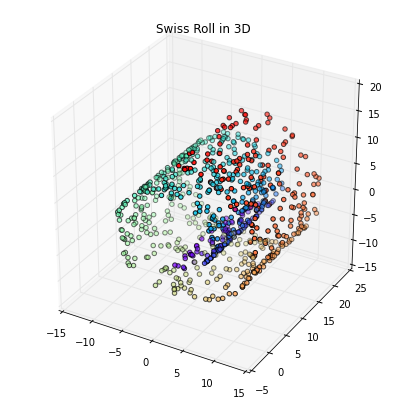

Dimensionality reduction is an important preprocessing step in many machine learning applications. High-dimensional data, while potentially rich in information, often suffers from the curse of dimensionality: increased computational cost, reduced statistical power, and a higher risk of overfitting. Linear dimensionality reduction techniques, such as Principal Component Analysis (PCA), handle this well when the relationships between variables are linear. However, many real-world datasets have an inherently nonlinear structure that linear methods fail to capture. Isomap (Isometric Feature Mapping) addresses this with a nonlinear approach to dimensionality reduction that preserves the global geodesic distances between data points.

This article gives a comprehensive overview of Isomap, covering its underlying ideas, algorithmic steps, advantages, limitations, and applications. We will look at its mathematical foundation, compare it with other dimensionality reduction techniques, and discuss practical considerations for implementing it.

Understanding the Isomap Algorithm

Isomap relies on geodesic distances, the shortest distances along the surface of a manifold, to embed high-dimensional data into a lower-dimensional space while preserving those distances. Unlike linear methods, which rely on straight-line Euclidean distances in the original space, Isomap accounts for nonlinear relationships within the data. The algorithm can be summarized in three key steps:

1. Constructing a Neighborhood Graph: The first step builds a neighborhood graph that represents the local connectivity of the data points. This is usually done with one of two methods:

- ε-neighborhood graph: Each data point is connected to all other points within a specified radius ε. The choice of ε is crucial and shapes the resulting embedding. An ε that is too small can split the graph into disconnected components, while an ε that is too large can blur the underlying manifold structure.

- k-nearest neighbors graph: Each data point is connected to its k nearest neighbors based on Euclidean distance. The choice of k has a similar effect: a smaller k risks missing important connections, while a larger k risks smoothing out fine details or adding edges that cut across folds of the manifold.

The edges in this graph represent local neighborhood relationships between data points, and their weights are usually the Euclidean distances between the connected points.
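The graph-construction step can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy is available, the dataset is small enough for a dense n × n distance matrix, and there are no duplicate points; the function name `neighborhood_graph` is hypothetical. Missing edges are marked with `np.inf`, and the k-nearest-neighbor graph is symmetrized so that i and j are connected if either is among the other's k nearest neighbors (one common convention, not the only one).

```python
import numpy as np

def neighborhood_graph(X, k=None, eps=None):
    """Hypothetical helper: return an (n, n) weight matrix W where W[i, j] is the
    Euclidean distance between neighbors i and j, and np.inf where there is no edge.

    Give exactly one of k (k-nearest-neighbors graph) or eps (epsilon-neighborhood graph).
    Assumes no duplicate points, so each point is its own unique nearest neighbor.
    """
    if (k is None) == (eps is None):
        raise ValueError("specify exactly one of k or eps")
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Dense pairwise Euclidean distances via broadcasting: shape (n, n).
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    if eps is not None:
        # Connect every pair closer than the radius eps.
        mask = dist <= eps
    else:
        # Column 0 of each sorted row is the point itself (distance 0),
        # so columns 1..k are its k nearest neighbors.
        order = np.argsort(dist, axis=1)[:, 1:k + 1]
        mask = np.zeros((n, n), dtype=bool)
        mask[np.arange(n)[:, None], order] = True
        mask = mask | mask.T  # symmetrize: keep the edge if either endpoint chose it
    W = np.full((n, n), np.inf)
    W[mask] = dist[mask]      # edge weight = Euclidean distance
    np.fill_diagonal(W, 0.0)
    return W
```

For example, `neighborhood_graph(X, k=10)` would build a 10-nearest-neighbor graph. A practical implementation would usually use a spatial index and a sparse matrix instead of the dense O(n²) array used here.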

2. Computing Geodesic Distances: The second step computes the geodesic distances between all pairs of data points. Each geodesic distance is approximated by the length of the shortest path through the neighborhood graph, so the distances reflect the global structure of the data manifold rather than straight-line shortcuts through the ambient space. This is done with a shortest-path algorithm such as Dijkstra's algorithm or the Floyd-Warshall algorithm. The resulting distance matrix approximates the true distances along the manifold, taking its nonlinear structure into account.
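The shortest-path step can be sketched as follows, assuming a dense weight matrix in which `np.inf` marks a missing edge. Both helper names are hypothetical: one runs Dijkstra's algorithm (using Python's `heapq`) from every node, the other is a vectorized Floyd-Warshall, and on the same graph they should produce the same geodesic distance matrix.

```python
import heapq
import numpy as np

def geodesic_dijkstra(W):
    """All-pairs shortest paths by running Dijkstra's algorithm from every node.
    W is a dense (n, n) weight matrix with np.inf marking missing edges."""
    W = np.asarray(W, dtype=float)
    n = W.shape[0]
    D = np.full((n, n), np.inf)
    for source in range(n):
        dist = D[source]              # a view: writes go straight into row `source`
        dist[source] = 0.0
        heap = [(0.0, source)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > dist[u]:
                continue              # stale entry; a shorter path was already found
            # Scan the dense row for neighbors (a sparse adjacency list would be faster).
            for v in np.flatnonzero(np.isfinite(W[u])):
                nd = d + W[u, v]
                if nd < dist[v]:
                    dist[v] = nd
                    heapq.heappush(heap, (nd, v))
    return D

def geodesic_floyd_warshall(W):
    """All-pairs shortest paths in O(n^3) time, vectorized over the (i, j) pairs."""
    D = np.array(W, dtype=float)      # copy so the input is not modified
    np.fill_diagonal(D, 0.0)
    for k in range(D.shape[0]):
        # Allow paths that pass through intermediate node k.
        D = np.minimum(D, D[:, k:k + 1] + D[k:k + 1, :])
    return D
```

Unreachable pairs stay at `np.inf` in both versions, which signals a disconnected graph. For larger graphs, SciPy's `scipy.sparse.csgraph.shortest_path` provides both algorithms on sparse matrices.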

3. Embedding into a Low-Dimensional Space: The final step embeds the data points into a lower-dimensional space (often 2D or 3D for visualization) while preserving the geodesic distances computed in the previous step. This is usually done with classical multidimensional scaling (MDS), which finds a configuration of points in the lower-dimensional space whose Euclidean distances best approximate the geodesic distance matrix. Classical MDS solves this in closed form: it double-centers the matrix of squared geodesic distances and keeps the top eigenvectors, which minimizes a criterion known as strain. The stress function, which measures the mismatch between the geodesic distances and the Euclidean distances in the low-dimensional space, is the objective of iterative metric MDS and is also a common way to assess the quality of an embedding.
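A minimal sketch of classical MDS in NumPy, assuming a finite, symmetric distance matrix (that is, a connected neighborhood graph); `classical_mds` is a hypothetical helper name. It double-centers the squared distances and keeps the top eigenvectors, scaled by the square roots of their eigenvalues. Small negative eigenvalues, which can appear when geodesic distances are not exactly Euclidean, are clipped to zero.

```python
import numpy as np

def classical_mds(D, m=2):
    """Classical (Torgerson) MDS: embed n points in m dimensions from an (n, n)
    distance matrix D. D must be finite, i.e. the neighborhood graph is connected."""
    D = np.asarray(D, dtype=float)
    if not np.all(np.isfinite(D)):
        raise ValueError("D has infinite entries; the neighborhood graph is disconnected")
    n = D.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n       # centering matrix
    B = -0.5 * H @ (D ** 2) @ H               # double-centered squared distances
    eigvals, eigvecs = np.linalg.eigh(B)      # B is symmetric; eigh returns ascending order
    idx = np.argsort(eigvals)[::-1][:m]       # indices of the m largest eigenvalues
    lam = np.clip(eigvals[idx], 0.0, None)    # guard against small negative eigenvalues
    return eigvecs[:, idx] * np.sqrt(lam)     # top-m eigenvectors scaled by sqrt(eigenvalue)
```

The embedding is only determined up to rotation, reflection, and translation, so two runs should be compared through their pairwise distances rather than their raw coordinates.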

Mathematical Formulation:

Let $X \in \mathbb{R}^{n \times d}$ be the data matrix whose rows $x_1, \dots, x_n$ are the $n$ data points in a $d$-dimensional space. The Isomap algorithm can be summarized mathematically as follows:

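- Neighborhood Graph Construction: Build a graph $G = (V, E)$, where $V = \{1, \dots, n\}$ indexes the data points and $E$ contains the edges connecting neighboring points, chosen by either the ε-neighborhood or the k-nearest-neighbors rule. Each edge $(i, j) \in E$ is weighted by the Euclidean distance $\lVert x_i - x_j \rVert_2$.

- Geodesic Distance Calculation: Compute the geodesic distance matrix $D$, where $D_{ij}$ is the length of the shortest path between data points $i$ and $j$ in the graph $G$. This is usually computed with Dijkstra's algorithm or the Floyd-Warshall algorithm.

- Classical Multidimensional Scaling (MDS): Apply classical MDS to the geodesic distance matrix $D$ to obtain a low-dimensional embedding $Y \in \mathbb{R}^{n \times m}$, where $m$ is the desired dimensionality ($m < d$). With $S_{ij} = D_{ij}^2$ and the centering matrix $H = I - \frac{1}{n}\mathbf{1}\mathbf{1}^\top$, classical MDS forms

$$B = -\tfrac{1}{2} H S H$$

and sets $Y = U_m \Lambda_m^{1/2}$, where $\Lambda_m$ is the diagonal matrix of the $m$ largest eigenvalues of $B$ and the columns of $U_m$ are the corresponding eigenvectors. Metric MDS variants instead minimize the stress function directly, and stress is also a common measure of how well an embedding preserves $D$:

$$\mathrm{Stress}(Y) = \sqrt{\frac{\sum_{i<j} \left(D_{ij} - \lVert y_i - y_j \rVert_2\right)^2}{\sum_{i<j} D_{ij}^2}}$$

where $y_i$ and $y_j$ are the embedded coordinates of data points $i$ and $j$ in the $m$-dimensional space.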
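The stress formula translates directly into NumPy. This sketch assumes `D` is the finite target distance matrix and `Y` holds one embedded point per row; `normalized_stress` is a hypothetical name. Each pair is counted once through the upper-triangle indices, matching the sums over i < j.

```python
import numpy as np

def normalized_stress(D, Y):
    """sqrt( sum_{i<j} (D_ij - ||y_i - y_j||)^2 / sum_{i<j} D_ij^2 )."""
    D = np.asarray(D, dtype=float)
    Y = np.asarray(Y, dtype=float)
    # Euclidean distances between the embedded points.
    DY = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
    i, j = np.triu_indices(D.shape[0], k=1)   # every pair with i < j, once
    residual = D[i, j] - DY[i, j]
    return float(np.sqrt(np.sum(residual ** 2) / np.sum(D[i, j] ** 2)))
```

A value of 0 means the embedding reproduces the target distances exactly; larger values indicate more distortion.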

Advantages of Isomap:

- Nonlinearity: Isomap handles nonlinear relationships in the data, unlike linear techniques such as PCA.

- Global Structure Preservation: By working with geodesic distances, Isomap captures the global structure of the data manifold, giving a more faithful representation of the underlying relationships.

- Visualization: The low-dimensional embedding produced by Isomap is often well suited to visualization, which helps in understanding the structure of the data.

- Manifold Learning: Isomap is a powerful manifold learning technique, capable of uncovering the manifold structure underlying high-dimensional data.

Limitations of Isomap:

- Computational Complexity: Computing all pairwise geodesic distances is expensive for large datasets and is the main computational bottleneck of the method. The Floyd-Warshall algorithm runs in O(n³) time; running Dijkstra's algorithm from every point of a sparse k-nearest-neighbor graph is more efficient (roughly O(k n² log n) with a binary heap) but still scales poorly with the number of data points. The n × n distance matrix also needs O(n²) memory, and the eigendecomposition in the MDS step adds further cost. Approximations, such as Landmark Isomap, which computes shortest paths from only a subset of landmark points, are often necessary for large-scale datasets.

- Parameter Sensitivity: The choice of ε or k strongly affects the result, so it must be made carefully to obtain meaningful embeddings. Because Isomap is unsupervised, there is no single standard procedure; common approaches include checking that the neighborhood graph is connected, comparing an embedding-quality measure such as residual variance or stress across parameter values, or cross-validating a downstream task.

- Sensitivity to Noise: Isomap can be sensitive to noise in the data. Noisy points can create "short-circuit" edges that jump between distant parts of the manifold, which shortens the computed geodesic distances and distorts the resulting embedding. Preprocessing steps such as noise reduction are often helpful.

- Data Distribution Assumptions: Isomap assumes that the data lies on a low-dimensional manifold embedded in a high-dimensional space, and it works best when that manifold is isometric to a convex region of Euclidean space. These assumptions do not hold for every dataset; a manifold with holes, for example, can be distorted in the embedding.
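One inexpensive check related to parameter sensitivity is whether the neighborhood graph is connected: if it is not, some geodesic distances are infinite and the MDS step cannot proceed. The sketch below, assuming NumPy, no duplicate points, and hypothetical helper names, builds a symmetrized k-nearest-neighbor adjacency matrix and counts connected components with a breadth-first search. It is a sanity check, not a complete parameter-selection method.

```python
from collections import deque
import numpy as np

def knn_adjacency(X, k):
    """Symmetrized k-nearest-neighbor adjacency matrix (boolean), assuming no duplicate points."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    nbrs = np.argsort(dist, axis=1)[:, 1:k + 1]   # skip column 0 (the point itself)
    A = np.zeros((n, n), dtype=bool)
    A[np.arange(n)[:, None], nbrs] = True
    return A | A.T

def count_components(A):
    """Number of connected components of an undirected graph, via breadth-first search."""
    n = A.shape[0]
    seen = np.zeros(n, dtype=bool)
    components = 0
    for start in range(n):
        if seen[start]:
            continue
        components += 1                  # found a node not reached from earlier starts
        seen[start] = True
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in np.flatnonzero(A[u] & ~seen):   # unvisited neighbors of u
                seen[v] = True
                queue.append(v)
    return components
```

A simple heuristic is to increase k until `count_components(knn_adjacency(X, k)) == 1`. The smallest such k avoids disconnected pieces, although it does not rule out short-circuit edges caused by noise.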

Comparison with Other Dimensionality Reduction Techniques:

Isomap differs from other dimensionality reduction techniques in its approach. PCA focuses on preserving variance and linear relationships, whereas Isomap prioritizes preserving geodesic distances, which lets it capture nonlinear structure. t-SNE (t-distributed Stochastic Neighbor Embedding) is another popular nonlinear technique, but it focuses on preserving local neighborhood relationships rather than global geodesic distances. Locally Linear Embedding (LLE) also focuses on local neighborhood relationships, but it reconstructs each data point as a linear combination of its neighbors. These differences give each method different strengths and weaknesses depending on the characteristics of the data.
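A small illustration of why preserving variance is not the same as preserving geodesic structure, assuming NumPy; `pca_project` and `is_monotonic` are hypothetical helpers. Points are sampled along a 270-degree arc of the unit circle. Any 1-D linear projection of an arc longer than a half circle must fold back on itself, so the PCA coordinate cannot keep the points in order, whereas the arc length (the geodesic coordinate that Isomap approximates) does.

```python
import numpy as np

def pca_project(X, m=1):
    """Project the rows of X onto their top-m principal components (PCA via SVD)."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                       # center each feature
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:m].T                          # shape (n, m)

def is_monotonic(z):
    """True if the sequence is strictly increasing or strictly decreasing."""
    d = np.diff(z)
    return bool(np.all(d > 0) or np.all(d < 0))

# Points on a 270-degree circular arc: a 1-D manifold curved in 2-D.
theta = np.linspace(0.0, 1.5 * np.pi, 100)
X = np.column_stack([np.cos(theta), np.sin(theta)])

z_pca = pca_project(X, m=1)[:, 0]
arc_length = theta                                # geodesic coordinate on the unit circle
print("PCA coordinate monotonic along the arc:", is_monotonic(z_pca))
print("Arc-length coordinate monotonic:", is_monotonic(arc_length))
```

This is a toy example rather than a benchmark, but it shows the kind of folding that a geodesic-distance method is designed to avoid.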

Applications of Isomap:

Isomap has found applications in many fields, including:

- Image Analysis: Reducing the dimensionality of image data for efficient storage and retrieval.

- Bioinformatics: Analyzing gene expression data and protein structures.

- Robotics: Motion planning and robot control.

- Computer Vision: Object recognition and scene understanding.

- Data Visualization: Visualizing high-dimensional data for exploratory data analysis.

Conclusion:

Isomap is a powerful nonlinear dimensionality reduction technique that captures the global structure of data embedded in a high-dimensional space. Its ability to preserve geodesic distances lets it uncover complex nonlinear relationships that linear methods often miss. Although computational cost and parameter sensitivity limit it, Isomap remains a valuable tool in many fields, particularly for data with an inherently nonlinear structure. Ongoing research continues to address these limitations and to extend Isomap to larger and more complex datasets. Whether to choose Isomap or another dimensionality reduction technique depends heavily on the characteristics of the data and the goals of the analysis, and weighing these factors carefully is essential to selecting the most appropriate method.
